Academic studies aren’t going to top any “best summer reads” lists: They can be complicated, confusing, and, well, pretty boring. But learning to read scientific research can help you answer important client questions and concerns… and provide the best evidence-based advice. In this article, we’ll help you understand every part of a study, and give you a practical, step-by-step system to evaluate its quality, interpret the findings, and figure out what it really means for you and your clients.

+++

Twenty-five years ago, the only people interested in studies were scientists and unapologetic, card-carrying nerds (like us).

But these days, everyone seems to care what the research says.

Because of that, we’re inundated with sensational headlines and products touting impressive-sounding, “science-backed” claims.

Naturally, your clients (and mother) want to know which ones have merit, and which ones don’t.

They may want your take on an unbelievable new diet trend that’s “based on a landmark study.”

Maybe they’re even questioning your advice:

- “Aren’t eggs bad for you?”

- “Won’t fruit make me fat?”

- “Doesn’t microwaving destroy the nutrients?”

(No, no, and no.)

More importantly, they want to know why you, their health and fitness coach, are more believable than Dr. Oz, Goop, or that ripped social media star they follow (you know, the one with the little blue checkmark).

For health and fitness coaches, learning how to read scientific research can help make these conversations simpler and better informed.

The more you grow this skill set, the better you’ll be able to:

- Identify false claims

- Evaluate the merits of new research

- Give evidence-based advice

But where do you even begin?

Right here, with this step-by-step guide to reading scientific studies. Use it to improve your ability to interpret a research paper, understand how it fits into the broader body of research, and see the worthwhile takeaways for your clients (and yourself).

++++

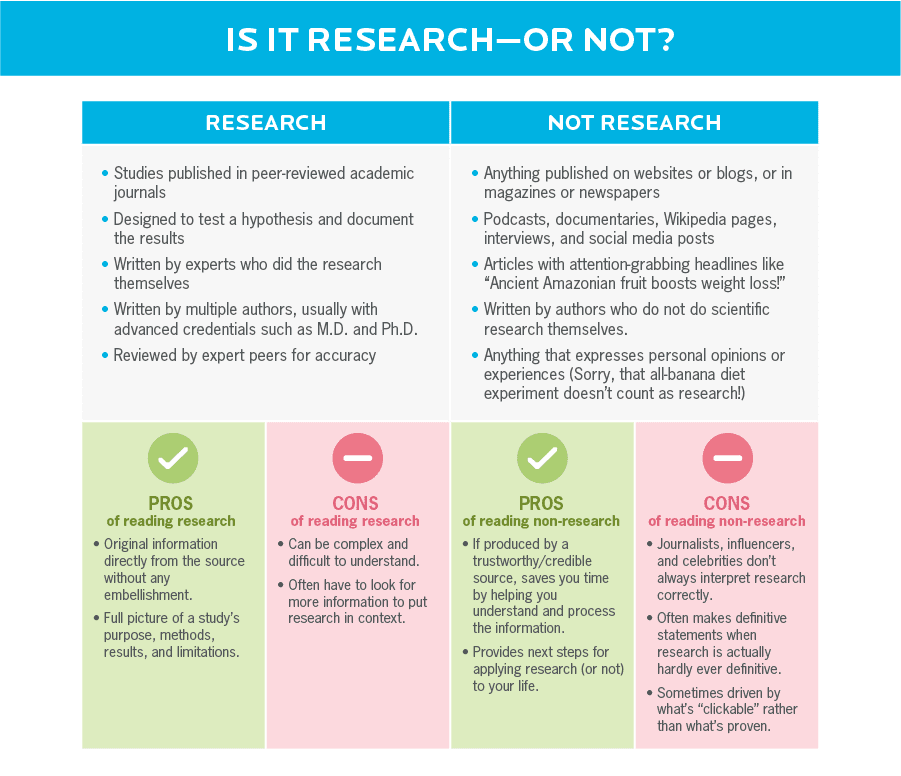

Know what counts as research, and what doesn’t.

People throw around the phrase, “I just read a study” all the time. But often, they’ve only seen it summarized in a magazine or on a website.

If you’re not a scientist, it’s okay to consult good-quality secondary sources for nutrition and health information. (That’s why we create Precision Nutrition content.) Practically speaking, there’s no need to dig into statistical analyses when a client asks you about green vegetables.

But for certain topics, and especially for emerging research, sometimes you’ll need to go straight to the original source.

Use the chart below to filter accordingly.

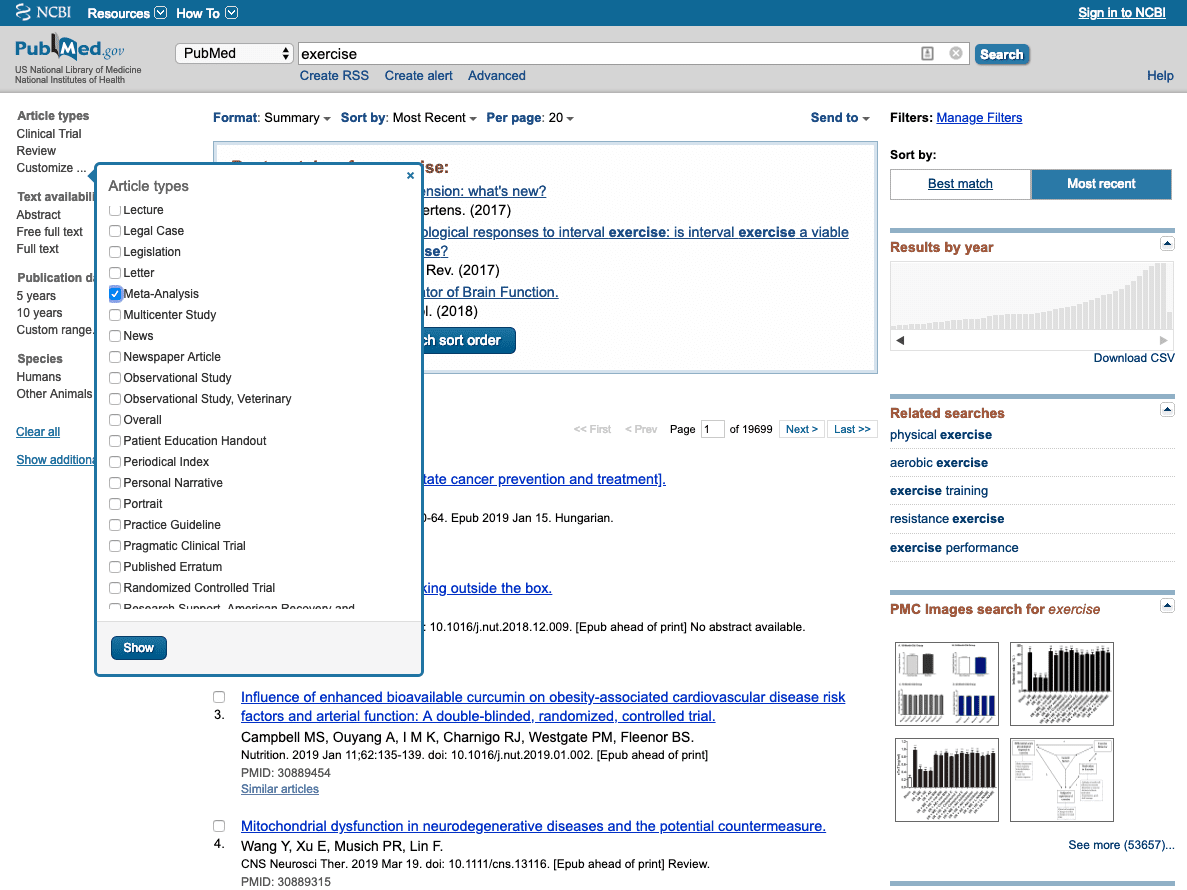

Okay, so how do you find the actual research?

Thanks to the internet, it’s pretty simple.

Online media sources reporting on research will often give you a link to the original study.

If you don’t have the link, search databases such as PubMed and Google Scholar using the authors’ names, journal name, and/or the study title.

(Totally lost? Check out this helpful PubMed tutorial for a primer on finding research online.)

If you’re having trouble finding a study, try searching the first, second, and last study authors’ names together. They rarely all appear on more than a handful of studies, so you’re likely to locate what you’re looking for.
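If you’d rather script that author search than type it by hand, here’s a minimal sketch. It assumes PubMed’s `[Author]` field tag and NCBI’s public E-utilities `esearch` endpoint; the function name and the three author names are made up for illustration, and the code only builds the search URL (it doesn’t fetch anything).

```python
from urllib.parse import urlencode

# NCBI's E-utilities search endpoint (assumed here; check NCBI's docs for usage limits).
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

def build_author_search_url(authors):
    """Build a PubMed search URL that requires every listed author to be on the paper."""
    # Joining with AND narrows the search to papers that list all of the names.
    term = " AND ".join(f"{name}[Author]" for name in authors)
    return ESEARCH_URL + "?" + urlencode({"db": "pubmed", "term": term, "retmode": "json"})

# Hypothetical first, second, and last authors of the study you're hunting for.
url = build_author_search_url(["Smith J", "Garcia M", "Chen L"])
print(url)
```

You can also paste the same search term (`Smith J[Author] AND Garcia M[Author] AND Chen L[Author]`) straight into the PubMed search box.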

You’ll almost always be able to read the study’s abstract—a short summary of the research—for free. Check to see if the full text is available, as well. If not, you may need to pay for access to read the complete study.

Once you’ve got your hands on the research, it’s time to dig in.

Not all research is created equal.

Be skeptical, careful, and analytical.

Quality varies greatly among publishers, journals, and even the scientific studies themselves.

After all, is every novel a Hemingway? Is every news outlet 100 percent objective? Are all your coworkers infallible geniuses?

Of course not. When it comes to achieving excellence, research has the same challenges as every other industry. For example…

Journals tend to publish novel findings.

Which sounds more interesting to read? A study that confirms what we already know, or one that offers something new and different?

Academic journals are businesses, and part of how they sell subscriptions, maintain their cutting-edge reputations, and get cited by other publications—and Good Morning America!—is by putting out new, attention-grabbing research.

As a result, some studies published in even the most well-respected scientific journals are one-offs that don’t mean all that much when compared to the rest of the research on that topic. (That’s one of many reasons nutrition science is so confusing.)

Researchers need to get published.

In order to get funding—a job requirement for many academics—researchers need to have their results seen. But getting published isn’t always easy, especially if their study results aren’t all that exciting.

Enter: predatory journals, which allow people to pay to have their research published without being reviewed. That’s a problem because it means no one is double-checking their work.

To those unfamiliar, studies published in these journals can look just like studies published in reputable ones. We even reviewed a study from one as an example, and we’ll tell you how to spot them on your own in a bit.

In the meantime, you can also check out this list of potentially predatory journals as a cross-reference.

Results can differ based on study size and duration.

Generally, the larger the sample size, meaning the more people of a certain population who are studied, the more reliable the results (although at some point, this becomes a problem, too).

The reason: With more people, you get more data. This allows scientists to get closer to the ‘real’ average. So a study population of 1,200 is less likely to be impacted by outliers than a group of, say, 10.

It’s sort of like flipping a coin: If you do it 10 times, you might get “heads” seven or eight times. Or even 10 in a row. But if you flip it 1,200 times, it’s likely to land very close to an even split between heads and tails, which better reflects the coin’s true odds.
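To put rough numbers on the coin example, here’s a small sketch that calculates the exact chance of a fair coin landing heads at least 70 percent of the time. The function name is ours, and the 70 percent cutoff is just an illustrative choice.

```python
from fractions import Fraction
from math import comb

def prob_at_least(n_flips, min_heads):
    """Exact chance that a fair coin shows at least `min_heads` heads in `n_flips` flips."""
    # Count every sequence of flips with enough heads, then divide by all possible sequences.
    favorable = sum(comb(n_flips, k) for k in range(min_heads, n_flips + 1))
    return Fraction(favorable, 2 ** n_flips)

small = prob_at_least(10, 7)      # 7 or more heads in 10 flips
large = prob_at_least(1200, 840)  # 840 or more heads in 1,200 flips

print(f"10 flips:    {float(small):.3f}")   # prints 0.172
print(f"1,200 flips: {float(large):.3e}")
```

With 10 flips, a lopsided result shows up about one time in six. With 1,200 flips, the same lopsided share is so unlikely that you’d essentially never see it, which is why larger samples are less swayed by flukes.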

One caveat: Sample size only matters when you’re comparing similar types of studies. (As you’ll learn later, experimental research provides stronger evidence than observational, but observational studies are almost always larger.)

For similar reasons, it’s also worth noting the duration of the research. Was it a long-term study that followed a group of people for years, or a single one-hour test of exercise capacity using a new supplement?

Sure, that supplement might have made a difference in a one-hour time window, but did it make a difference in the long run?

Longer study durations allow us to test the outcomes that really matter, like fat loss and muscle gain, or whether heart attacks occurred. They also help us better understand the true impact of a treatment.

For example, if you examine a person’s liver enzymes after just 15 days of eating high fat, you might think they should head to the ER. By 30 days, however, their body has compensated, and the enzymes are at normal levels.

So more time means more context, and that makes the findings both more reliable and applicable for real life. But just like studying larger groups, longer studies require extensive resources that often aren’t available.

The bottom line: Small, short-term studies can add to the body of literature and provide insights for future study, but on their own, they’re very limited in what you can take away.

Biases can impact study results.

Scientists can be partial to seeing certain study outcomes. (And so can you, as a reader.)

Research coming out of universities—as opposed to corporations—tends to be less biased, though this isn’t always the case.

Perhaps a researcher worked with or received funding from a company that has a financial interest in their studies’ findings. This is completely acceptable, as long as the researcher acknowledges they have a conflict or potential bias.

But it can also lead to problems. For example, the scientist might feel pressured to conduct the study in a certain way. This isn’t exactly cheating, but it could influence the results.

More commonly, researchers may inadvertently—and sometimes purposefully—skew their study’s results so they appear more significant than they really are.

In both of these cases, you might not be getting the whole story when you look at a scientific paper.

That’s why it’s critical to examine each study in the context of the entire body of evidence. If it differs significantly from the other research on the topic, it’s important to ask why.

Your Ultimate Study Guide

Now you’re ready for the fun part: Reading and analyzing actual studies, using our step-by-step process. Make sure to bookmark this article so you can easily refer to it anytime you’re reading a paper.

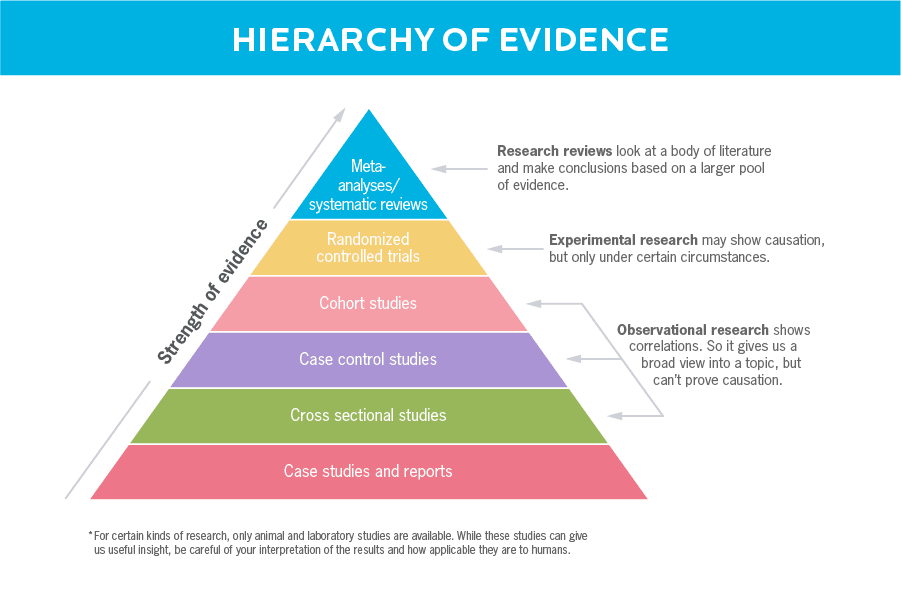

Step 1: Decide how strong the evidence is.

To determine how much stock you should put in a study, you can use this handy pyramid called the “hierarchy of evidence.”

Here’s how it works: The higher up on the pyramid a research paper falls, the more trustworthy the information.

For example, you ideally want to first look for a meta-analysis or systematic review—see the top of the pyramid—that deals with your research question. Can’t find one? Then work your way down to randomized controlled trials, and so on.

Study designs that fall toward the bottom of the pyramid aren’t useless, but in order to see the big picture, it’s important to understand how they compare to more vetted forms of research.

Research reviews

These papers are considered very strong evidence because they review and/or analyze a selection of past studies on a given topic. There are two types: meta-analyses and systematic reviews.

In a meta-analysis, researchers use complex statistical methods to combine the findings of several studies. Pooling together studies increases the statistical power, offering a stronger conclusion than any single study. Meta-analyses can also identify patterns among study results, sources of disagreement, and other interesting relationships that a single study can’t provide.
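For a feel of what “pooling” means, here’s a simplified sketch of one common approach, inverse-variance (fixed-effect) weighting, in which more precise studies count for more. The three studies and their numbers are invented, and real meta-analyses often use more involved models (such as random-effects models), so treat this as an illustration rather than the method any particular paper used.

```python
from math import sqrt

def pool_fixed_effect(studies):
    """Inverse-variance pooling of (effect, standard_error) pairs."""
    # A study with a smaller standard error (more precision) gets a larger weight.
    weights = [1 / se ** 2 for _, se in studies]
    pooled = sum(w * effect for w, (effect, _) in zip(weights, studies)) / sum(weights)
    pooled_se = sqrt(1 / sum(weights))
    return pooled, pooled_se

# Hypothetical results: extra fat loss (kg) vs. placebo, and each study's standard error.
studies = [(1.2, 0.8), (0.5, 0.5), (0.9, 0.4)]
pooled, pooled_se = pool_fixed_effect(studies)

print(f"Pooled effect: {pooled:.2f} kg (standard error {pooled_se:.2f})")
```

Notice that the pooled standard error comes out smaller than that of any single study. That’s the “increased statistical power” in action.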

In a systematic review, researchers review and discuss the available studies on a specific question or topic. Typically, they use precise and strict criteria for what’s included.

Both of these approaches look at multiple studies and draw a conclusion.

This is helpful because:

- A meta-analysis or systematic review means that a team of researchers has closely scrutinized all studies included. Essentially, the work has already been done for you. Does each individual study make sense? Were the research methods sound? Does their statistical analysis line up? If not, the study will be thrown out.

- Looking at a large group of studies together can help put outliers in context. If 25 studies found that consuming fish oil improved brain health, and two found the opposite, a meta-analysis or systematic review would help the reader avoid getting caught up in the two studies that seem to go against the larger body of evidence.

PubMed has made these easy to find: to the left of the search box, just click “customize” and you can search for only reviews and meta-analyses.

If you’re reading a research review and things aren’t adding up for you, or you’re not sure how to apply what you’ve learned to your real-life coaching practice, seek out a position stand on the topic.

Position stands are official statements made by a governing body on topics related to a particular field, like nutrition, exercise physiology, dietetics, or medicine.

They look at the entire body of research and provide practical guidelines that professionals can use with clients or patients.

Here’s an example: The 2017 International Society of Sports Nutrition Position Stand on diets and body composition.

Or, say you have a client who’s older and you’re wondering how to safely increase their training capacity (but don’t want to immerse yourself in a dark hole of research). Simply look for the position stand on exercise and older adults.

To find the position stands in your field, consult the website of whatever governing body you belong to. For example, if you’re a personal trainer certified through ACSM, NASM, ACE, or NSCA, consult the respective website for each organization. They should feature position stands on a large variety of topics.

Randomized controlled trials

This is an experimental study design: A specific treatment is given to a group of participants, and the effects are recorded. In some cases, this type of study can prove that a treatment causes a certain effect.

In a randomized controlled trial, or RCT, one group of participants (the control group) doesn’t get the treatment being tested. When the trial is “blinded,” both groups think they’re getting the treatment.

For instance, one half of the participants might take a drug, while the other half gets a placebo.

The groups are chosen randomly, which helps make sure they’re comparable from the start. Giving the control group a placebo helps account for the placebo effect, which occurs when someone experiences a benefit simply because they believe the treatment will help.

If you’re reading an RCT paper, look for the words “double blind” or the abbreviation “DBRCT” (double blind randomized controlled trial). This is the gold standard of experimental research. It means neither the participants nor researchers know who’s taking the treatment and who’s taking the placebo. They’re both “blind,” so the results are less likely to be skewed.
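Here’s a bare-bones sketch of what random assignment can look like. The participant IDs, the function name, and the “A”/“B” group labels are all hypothetical; real trials use more careful procedures, but the core idea is the same: chance, not the researcher, decides who lands in which group.

```python
import random

def assign_groups(participants, seed=None):
    """Randomly split participants into two groups labeled only 'A' and 'B'."""
    rng = random.Random(seed)  # a fixed seed makes the shuffle repeatable for auditing
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # Neutral labels: which letter means "treatment" can be kept hidden until the analysis is done.
    return {"A": shuffled[:half], "B": shuffled[half:]}

participants = [f"P{i:02d}" for i in range(1, 21)]  # 20 made-up participant IDs
groups = assign_groups(participants, seed=42)

print(len(groups["A"]), len(groups["B"]))  # prints 10 10
```

Using neutral labels is one simple way to support blinding: the people handing out pills and recording results only ever see “A” or “B.”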

Observational studies

In an observational study, researchers look at and analyze ongoing or past behavior or information, then draw conclusions about what it could mean.

Observational research shows correlations, which means you can’t take an observational study and say it “proves” anything. But even so, when folks hear about these findings on the popular morning shows, that part’s often missed, which is why you might end up with confused clients.

So what’re these types of studies good for? They can help us make educated guesses about best practices.

Again, one study doesn’t tell us a lot. But if multiple observational studies show similar findings, and there are biological mechanisms that can reasonably explain them, you can be more confident they’ve uncovered a pattern. Like that eating plant foods is probably healthful—or that smoking probably isn’t.

Scientists can also use these studies to generate hypotheses to test in experimental studies.

There are three main types of observational studies:

- Cohort studies follow a group of people over a certain period of time. In fact, these studies can track people for years or even decades. Usually, the scientists are looking for a specific factor that might affect a given outcome. For example, researchers start with a group of people who don’t have diabetes, then watch to see which people develop the disease. Then they’ll try to connect the dots, and determine which factors the newly-diagnosed people have in common.

- Case control studies compare the histories of two sets of people that are different in some way. For example, the researchers might look at two groups who lost 30 pounds: 1) those who successfully maintained their weight loss over time; 2) those who didn’t. This type of study would suggest a reason why that happened and then analyze data from the participants to see if it might be true.

- Cross sectional studies use a specific population—say, people with high blood pressure—and look for additional factors they might have in common with each other. This could be medications, lifestyle choices, or other conditions.

Case studies and reports

These are basically stories that are interesting or unusual in some way. For example, this study reviewed the case of a patient who saw his blood cholesterol levels worsen significantly after adding 1-2 cups of Bulletproof Coffee to his daily diet.

Case studies and reports might provide detail and insight that would be hard to share in a more formal study design, but they’re not considered the most convincing evidence. Instead, they can be used to make more informed decisions and provide ideas about where to go next.

Animal and laboratory studies

These are studies done on non-human subjects—for instance, on pigs, rats, or mice, or on cells in Petri dishes—and can fall anywhere within the hierarchy.

Why are we mentioning them? Mainly, because it’s important to be careful with how much stock you put in the results. While it’s true that much of what we know about human physiology (from thermal regulation to kidney function) is thanks to animal and lab studies, people aren’t mice, or fruit flies, or even our closest relatives, the other primates.

So animal and cell studies can suggest things about humans, but aren’t always directly applicable.

The main questions you’ll want to answer here are: What type of animal was used? Were the animals used a good model for a human?

For example, pigs are much better models than mice for research on cardiovascular disease and diets, because of the size of their coronary arteries and their omnivorous diets. Mice are often used for genetic studies, as they’re easier to alter genetically and have shorter reproduction cycles.

Also, context really matters. If an ingredient is shown to cause cancer in an animal study, how much was used, and what’s the human equivalent?

Or, if a chemical is shown to increase protein synthesis in cells grown in a dish, then for how long? Days, hours, minutes? To what degree, and how would that compare to a human eating an ounce of chicken? What other processes might this chemical impact?

Animal and lab studies usually don’t provide solutions and practical takeaways. Instead, they’re an early step in building a case to do experimental research.

The upshot: You need to be careful not to place more importance on these findings than they deserve. And, as always, look at how these small studies fit into the broader picture of what we already know about the topic.

Bonus: Qualitative and mixed-method studies

We haven’t mentioned one research approach that cuts across many study designs: qualitative research, as opposed to quantitative (numeric) research.

Qualitative studies focus on the more intangible elements of what was found, such as what people thought, said, or experienced. They tell us about the human side of things.

So, a qualitative study looking at how people respond to a new fitness tracker might ask them how they feel about it, and gather their answers into themes such as “ease of use” or “likes knowing how many steps taken.”

Qualitative studies are often helpful for exploring ideas and questions that quantitative data raises.

For example, quantitative data might tell us that a certain percentage of people don’t make important health changes even after a serious medical diagnosis.

Qualitative research might find out why, by interviewing people who didn’t make those changes, and seeing if there were consistent themes, such as: “I didn’t get enough info from my doctor” or “I didn’t get support or coaching.”

When a study combines quantitative data with qualitative research, it’s known as a “mixed-methods” study.

Your takeaway: Follow the hierarchy of evidence.

There’s a big difference between a double blind randomized controlled human trial on the efficacy of a weight loss supplement (conducted by an independent lab) and an animal study on that same supplement.

There’s an even bigger difference between a systematic review of studies on whether red meat causes cancer and a case report on the same topic.

When you’re looking at research, keep results in perspective by taking note of how strong the evidence can even be, based on the pyramid above.

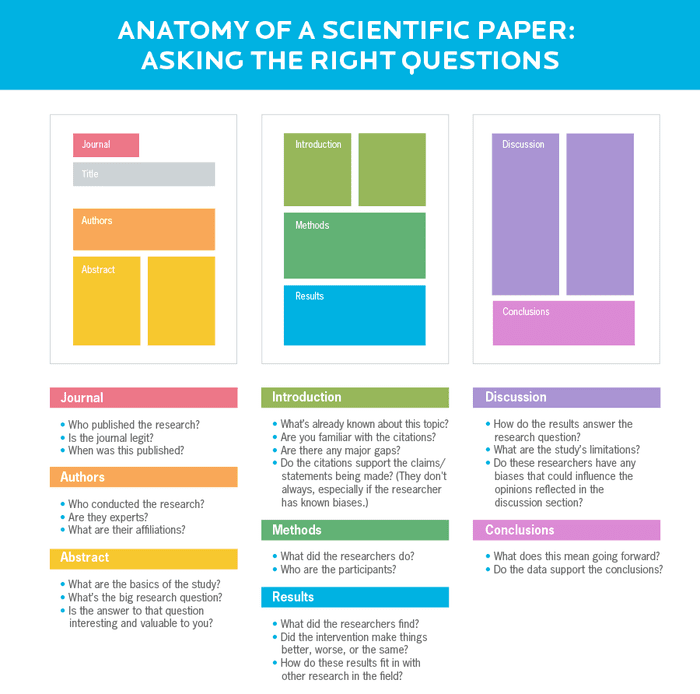

Step 2: Read the study critically.

Just because a study was published doesn’t mean it’s flawless. So while you might feel a bit out of your depth when reading a scientific paper, it’s important to remember that the paper’s job is to convince you of its evidence.

And your job when you’re reading a study is to ask the right questions.

Here’s exactly what to look for, section by section.

Journal

High quality studies are published in academic journals, which have names like Journal of Strength and Conditioning Research, not TightBodz Quarterly.

To see if the study you’re reading is published in a reputable journal:

- Check the impact factor. While not a perfect system, using a database like Scientific Journal Rankings to look for a journal’s “impact factor” (identified as “SJR” by Scientific Journal Rankings) can provide an important clue about a journal’s reputation. If the impact factor is greater than one, it’s likely to be legit.

- Check if the journal is peer-reviewed. Peer-reviewed studies are read critically by other researchers before being published, lending them a higher level of credibility. Most journals state whether they require peer review in their submission requirements, which can generally be found by Googling the name of the journal and the words “submission guidelines.” If a journal doesn’t require peer review, it’s a red flag.

- See how long the publisher has been around. Most reputable academic journals are published by companies that have been in business since at least 2012. Publishers that have popped up since then are more likely to be predatory.

Authors

These are the people who conducted the research, and finding out more about their backgrounds can tell you a lot about how credible a study might be.

To learn more about the authors:

- Look them up. They should be experts in the field the study deals with. That means they’ve contributed research reviews and possibly textbook chapters on this topic. Even if the study is led by a newer researcher in the field, you should be able to find information about their contributions, credentials, and areas of expertise on their university or lab website.

- Check out their affiliations. Just like you want to pay attention to any stated conflicts of interest, it’s smart to be aware if any of the authors make money from companies that have an interest in the study’s findings.

Note: It doesn’t automatically mean a study is bogus if one (or more) of the authors make money from a company in a related industry, but it’s worth noting, especially if there seem to be other problems with the study itself.

Abstract

This is a high-level summary of the research, including the study’s purpose, significant results, and the authors’ conclusions.

To get the most from the abstract, you want to:

- Figure out the big question. What were the researchers trying to find out with this study?

- Decide if the study is relevant to you. Move on to the later parts of the study only if you find the main question interesting and valuable. Otherwise, there’s no reason to spend time reading it.

- Dig deeper. The abstract doesn’t provide context, so in order to understand what was discovered in a study, you need to keep reading.

Introduction

This section provides an overview of what’s already known about a topic and a rationale for why this study needed to be done.

When you read the introduction:

- Familiarize yourself with the subject. Most introductions list previous studies and reviews on the study topic. If the references say things that surprise you or don’t seem to line up with what you already know about the body of evidence, get up to speed before moving on. You can do that by either reading the specific studies that are referenced, or reading a comprehensive (and recent) review on the topic.

- Look for gaps. Some studies cherry-pick introduction references based on what supports their ideas, so doing research of your own can be revealing.

Methods

You’ll find demographic and study design information in this section.

All studies are supposed to be reproducible. In other words, another researcher following the same protocols would likely get the same results. So this section provides all the details on how you could replicate a study.

In the methodology section, you’ll want to:

- Learn about the participants. Knowing who was studied can tell you a bit about how much (or how little) you can apply the study results to you (or your clients). Women may differ from men; older subjects may differ from younger ones; groups may differ by ethnicity; medical conditions may affect the results; and so on.

- Take note of the sample size. Now is also a good time to look at how many participants the study included, as that can be an early indicator of how seriously you can take the results, depending on the type of study.

- Don’t get bogged down in the details. Unless you work in the field, it’s unlikely that you’ll find value in getting into the nitty-gritty of how the study was performed.

Results

Read this section to find out if the intervention made things better, worse, or… the same.

When reading this section:

- Skim it. The results section tends to be dense. Reading the headline of each paragraph can give you a good overview of what happened.

- Check out the figures. To get the big picture of what the study found, seek to understand what’s being shown in the graphs, charts, and figures in this section.

Discussion

This is an interpretation of what the results might mean. Key point: It includes the authors’ opinions.

As you read the discussion:

- Note any qualifiers. This section is likely to be filled with “maybe,” “suggests,” “supports,” “unclear,” and “more studies need to be done.” That means you can’t cite ideas in this section as fact, even if the authors clearly prefer one interpretation of the results over another. (That said, be careful not to dismiss the interpretation offhand, particularly if the author has been doing this specific research for years or decades.)

- Acknowledge the limitations. The discussion also includes information about the limits of how the research can be applied. Diving deep into this section is a great opportunity for you to better understand the weaknesses of the study and why it might not be widely applicable (or applicable to you and/or your clients).

Conclusions

Here, the authors sum up what their research means, and how it applies to the real world.

To get the most from this section:

- Consider reading the conclusions first. Yes, before the intro, methodology, results, or anything else. This helps keep the results of the study in perspective. After all, you don’t want to read more into the outcome of the study than the people who actually did the research, right? Starting with the conclusions can help you avoid getting more excited about a study’s results, or more convinced of their importance, than the people who conducted it.

- Make sure the data support the conclusions. Sometimes, authors draw inappropriate conclusions or overgeneralize results, like when a scientist studying fruit flies applies the results to humans, or when researchers suggest that observational study results “prove” something to be true (which, as you know from the hierarchy of evidence, isn’t possible). Look for conclusions that don’t seem to add up.

Before researchers start a study, they have a hypothesis that they want to test. Then, they collect and analyze data and draw conclusions.

The concept of statistical significance comes in during the analysis phase of a study.

In academic research, statistical significance is measured by a p-value, which can range from 0 to 1 (0 percent to 100 percent). The p-value tells you how likely it is that chance alone would produce results at least as striking as the ones observed, assuming the treatment actually does nothing.

The “p” in p-value is probability.

P-values are usually found in the results section.

Put simply, the closer the p-value is to 0, the harder it is to write off a study’s results as a random fluke, and the stronger the case that the treatment or intervention made a difference.

For example:

Let’s say researchers are testing fat loss supplement X.

Their hypothesis is that taking supplement X results in greater fat loss than not taking it.

The study participants are randomly divided into two groups:

- One group takes supplement X.

- One group takes a placebo.

At the end of the study, the group that took supplement X, on average, lost more fat. So it would seem that the researchers’ hypothesis is valid.

But there’s a catch: Some people who took supplement X lost less fat than some of those who took the placebo. So does supplement X help with fat loss or not?

This is where statistics and p-values come in. If you look at all the participants and how much fat they lost, you can figure out if it’s likely due to the supplement or just the randomness of the universe.

The most common threshold is a p-value under 0.05 (5 percent), which is considered statistically significant. Numbers over that threshold are not.

This threshold is a convention rather than a law of nature, and some types of analysis use a much stricter one. Genome-wide association studies, for example, typically need a p-value of less than 0.00000005 to be considered statistically significant.

So if the researchers studying supplement X find that their p-value is 0.04, that means: If supplement X truly had no effect on fat loss, there’d be only a 4 percent chance of seeing a difference this large (or larger) through random chance alone. It does not mean there’s a 4 percent chance that supplement X does nothing, or a 96 percent chance of getting the same results if you replicated the study.

A couple of important things to note about p-values:

- A smaller p-value does NOT mean a bigger impact from supplement X. It just means the effect is less likely to be a fluke.

- The p-value doesn’t test for how well a study is designed. It only looks at how easily chance alone could explain the results.

Why are we explaining this in such detail?

Because if you see a study that cites a p-value higher than 0.05, the results aren’t statistically significant.

That means either 1) the treatment had no effect, or 2) the study wasn’t able to detect one (for example, because it was too small, or because results varied too much from person to person).

So in the case of supplement X, if the p-value were higher than 0.05, you couldn’t say that supplement X helped with fat loss. This is true even if you can see that, on average, the group taking supplement X lost 10 pounds of fat. (You can learn more here.)
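If you want to see where a p-value can come from, here’s a small sketch using a permutation test, one of several ways to calculate one (the studies you read may well use a different test). The fat loss numbers and the function name are invented. The idea: if supplement X did nothing, the group labels would be meaningless, so we reshuffle the labels every possible way and count how often chance alone gives the “supplement” group a result at least as good as the real one.

```python
from itertools import combinations

def permutation_p_value(treated, control):
    """One-sided exact permutation test on total (and therefore average) fat loss."""
    pooled = treated + control
    observed_total = sum(treated)
    # Every possible way to pick a "treated" group of the same size from all participants.
    relabelings = list(combinations(pooled, len(treated)))
    # With fixed group sizes, a higher group total means a bigger gap between group averages.
    as_extreme = sum(1 for group in relabelings if sum(group) >= observed_total)
    return as_extreme / len(relabelings)

# Hypothetical fat loss in pounds for each participant.
supplement_x = [4.0, 6.5, 1.0, 7.0, 5.5, 3.0]
placebo = [2.0, 3.5, 0.5, 4.5, 1.5, 5.0]

p_value = permutation_p_value(supplement_x, placebo)
print(f"p-value: {p_value:.3f}")
```

A p-value under 0.05 here would mean that fewer than 5 percent of the reshuffled groupings matched or beat the real result. Note that some supplement X takers in this made-up data lost less than some placebo takers, just like in the example above.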

The takeaway: Ask the right questions.

We’re not saying you should read a study critically because researchers are trying to trick you.

But each section of a study can tell you something important about how valid the results are, and how seriously you should take the findings.

If you read a study that concludes green tea speeds up your metabolism, and:

- the researchers have never studied green tea or metabolism before;

- the researchers are on the board of a green tea manufacturer;

- the introduction fails to cite recent meta-analyses and / or reviews on the topic that go against the study’s results;

- and the study was performed on mice…

… then you should do some further research before telling people that drinking green tea will spike their metabolism and accelerate fat loss.

This isn’t to say green tea can’t be beneficial for someone trying to lose weight. After all, it’s a generally healthful drink that doesn’t have calories. It’s just a matter of keeping the research-proven benefits in perspective. Be careful not to overblow the perks based on a single study (or even a few suspect ones).

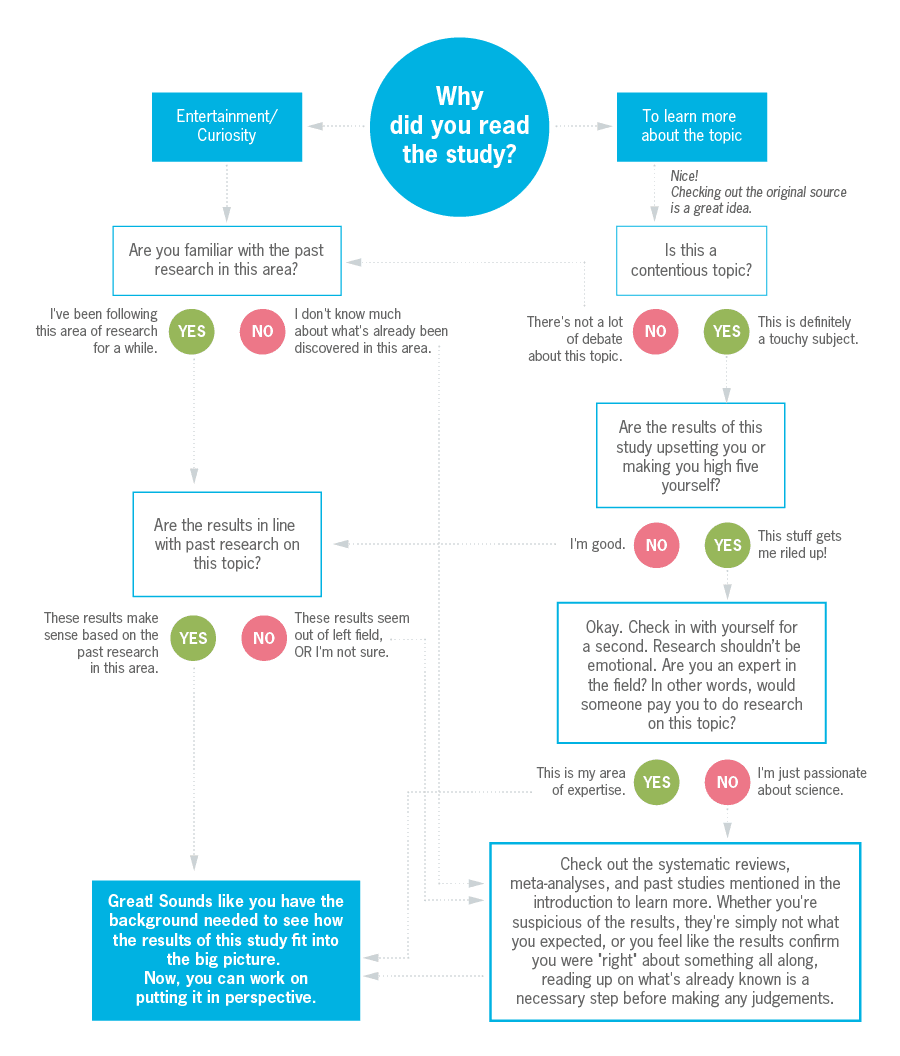

Step 3: Consider your own perspective.

So you’ve read the study and have a solid idea of how convincing it really is.

But beware:

We tend to seek out information we agree with.

Yep, we’re more likely to click on (or go searching for) a study if we think it will align with what we already believe.

This is known as confirmation bias.

And if a study goes against what we believe, well, we might just find ourselves feeling kind of ticked off.

You will bring some biases to the table when you read and interpret a study. All of us do.

But the truth is, not everyone should be drawing conclusions from scientific studies on their own, especially if they’re not an expert in the field. Because again, we’re all a little bit biased.

Once you’ve read a study, use this chart to determine how you should approach interpreting the results.

The takeaway: Be aware of your own point of view.

Rather than pretending you’re “objective” and “logical,” recognize that human brains are inherently biased.

A warning sign of this built-in bias: feeling especially annoyed or triumphant after reading a study.

Remember, science isn’t about being right or wrong; it’s about getting closer to the truth.

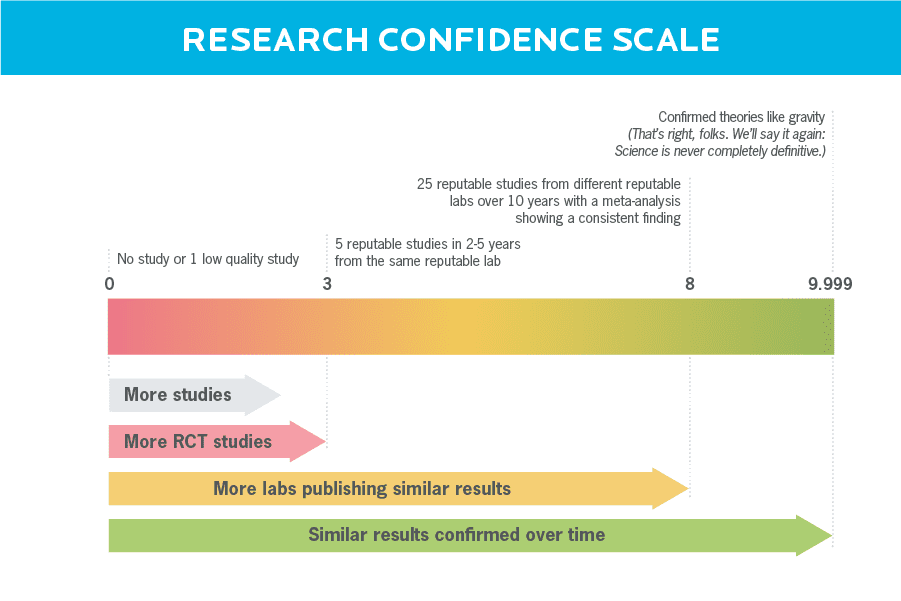

Step 4: Put the conclusions in context.

One single study on its own doesn’t prove anything. Especially if it flies in the face of what we knew before.

(Rarely, by the way, will a study prove anything. Rather, it will add to a pile of probability about something, such as a relationship between Factor X and Outcome Y.)

Look at new research as a very small piece of a very large puzzle, not as stand-alone gospel.

That’s why we emphasize position stands, meta-analyses, and systematic reviews. To some degree, these do the job of providing context for you.

If you read an individual study, you’ll have to do that work on your own.

For each scientific paper you read, consider how it lines up with the rest of the research on a given topic.

The takeaway: Go beyond the single study.

Let’s say a study comes out that says creatine doesn’t help improve power output. The study is high quality, and seems well done.

These results are pretty strange, because most of the research on creatine over the past few decades shows that it does help people boost their athletic performance and power output.

So do you stop taking creatine, one of the most well-researched supplements out there, if your goal is to increase strength and power?

Well, it would be pretty silly to disregard the past 25 years of studies on creatine supplementation just because of one study.

Instead, it probably makes more sense to take this study and set it aside—at least until more high-quality studies replicate a similar result. If that happens, then we might take another look at it.
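Here's a rough sketch of why one contrary study shouldn't flip your view. It pools several effect estimates using inverse-variance weighting, a simple fixed-effect approach in which more precise studies count for more. Every number below is hypothetical (these aren't real creatine results), and `pooled_effect` is our own illustrative helper. Real meta-analyses typically go further, for example by using random-effects models and checking how much the studies disagree with one another.

```python
from math import sqrt


def pooled_effect(studies):
    """Fixed-effect (inverse-variance) pooled estimate and its standard error.

    `studies` is a list of (effect, standard_error) pairs.
    """
    # More precise studies (smaller standard error) get larger weights.
    weights = [1 / se**2 for _, se in studies]
    total_weight = sum(weights)
    estimate = sum(w * effect for w, (effect, _) in zip(weights, studies)) / total_weight
    return estimate, sqrt(1 / total_weight)


# Hypothetical standardized effects on power output: (effect, standard error).
# Made-up numbers, for illustration only.
earlier_studies = [(0.40, 0.20), (0.30, 0.15), (0.50, 0.25), (0.35, 0.20), (0.45, 0.20)]
new_null_study = (0.00, 0.20)

before, _ = pooled_effect(earlier_studies)
after, _ = pooled_effect(earlier_studies + [new_null_study])
print(f"Pooled effect before: {before:.2f}, after: {after:.2f}")
```

With these invented inputs, adding the null study nudges the pooled estimate down, but it stays clearly positive. It would take several more null results of similar quality to pull it toward zero.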

Getting the most out of scientific research, and potentially applying it to our lives, is more about the sum total than the individual parts.

Science definitely isn’t perfect, but it’s the best we’ve got.

It’s awesome to be inspired by science to experiment with your nutrition, fitness, and overall health routines or to recommend science-based changes to your clients.

But before making any big changes, be sure it’s because it makes sense for you (or your client) personally, not just because it’s the Next Big Thing.

Take notice of how the changes you make affect your body and mind, and when something isn’t working for you (or your client), go with your gut.

Science is an invaluable tool in nutrition coaching, but we’re still learning and building on knowledge as we go along. And sometimes really smart people get it wrong.

Take what you learn from research alone with a grain of salt.

And if you consider yourself an evidence-based coach (or a person who wants to use evidence-based methods to get healthier), remember that personal experiences and preferences matter, too.

The post What’s that study REALLY say? How to decode research, according to science nerds. appeared first on Precision Nutrition.

from Blog – Precision Nutrition https://www.precisionnutrition.com/how-to-read-scientific-research

via Holistic Clients
